Randomized Algorithms

Peter Robinson


Why Randomized Algorithms?

- Randomness is a powerful resource for developing efficient algorithms with provable performance guarantees.

- Compared to deterministic algorithms, randomized algorithms are often...

- faster (or use less memory),

- simpler to understand, and...

- easier to implement, e.g. fewer special cases to worry about.

What this Course is about

- How to use randomization to design better algorithms.

- Discuss many applications where access to randomness provides significant benefits.

- Equip you with the necessary tools & techniques to analyze algorithms and other random processes.

- Provide you with a foundation for using probabilistic concepts in your own work.

Topics (tentative)

- Concentration bounds

- Probabilistic data structures

- Fast graph algorithms

- Verification using fingerprinting techniques

- Random walks, Markov chains

- The probabilistic method

- Resilience against adversarial attacks in networks

- Symmetry breaking in networks

- Low-memory algorithms; dealing with dynamically-changing data

Marking Scheme (tentative *)

- Homework assignments (40%)

- Presentations of a research or survey paper (20%)

- Reviews of peers' presentations (5%)

- Final project (35%): choice of systems-focused or theory-focused

Prerequisites

- Knowledge of data structures & algorithms (undergrad level) is recommended... but most parts of the course are self-contained

- Undergrad-level discrete mathematics: discrete probability, basic knowledge of graph theory and combinatorics (e.g., how many ways can we choose an object such that...?)

- Basics of the Big-O notation (e.g., being able to figure out the meaning of asymptotic upper and lower bounds)

- Being able to write simple programs in <insert your programming language of choice here>

- Unsure if this course is suitable for you? Come talk to me.

Resources

- Material is loosely based on: Probability and computing: Randomized algorithms and probabilistic analysis by Michael Mitzenmacher and Eli Upfal. 2nd edition, 2017. Cambridge University Press. (Not required but recommended.)

- Various resources on the web; to be added as we need them.

What are Randomized Algorithms?

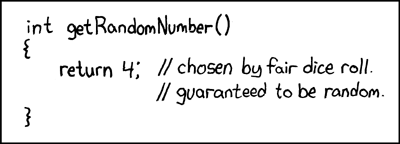

(1) Algorithms that make random choices during their execution. They use a random number generator to decide the next step.

(2) Algorithms that execute deterministically on randomly selected inputs.

Which interpretation is standard?

Answer: (1). Interpretation (2) describes the average-case (probabilistic) analysis of deterministic algorithms.

Roadmap for Today

- Verification of Matrix Multiplication

- Fast Min-Cut Computation

- Some techniques & tools along the way

Probability Space & Probability Axioms

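- a sample space $\Omega$: set of all possible outcomes

- the set of events $\mathcal{F}$, where each event is a subset of $\Omega$; for a discrete prob. space, $\mathcal{F} = 2^{\Omega}$.

- the probability function $\Pr: \mathcal{F} \to [0, 1]$ with $\Pr[\Omega] = 1$

- for all finite or countably infinite sequences of mutually disjoint events $E_1, E_2, \dots$, it holds that $\Pr\left[\bigcup_{i \ge 1} E_i\right] = \sum_{i \ge 1} \Pr[E_i]$.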

The RAM model

We analyze time complexity in the Random Access Machine model.

- Single processor, sequential execution

- Each simple operation takes 1 time step.

- Loops and subroutines are not simple operations.

- Each memory access takes one time step, and there is no shortage of memory.

Application: Verifying Matrix Multiplication

The Problem

Input: Given three $n \times n$ matrices $A$, $B$, and $C$; entries are rational numbers.

Goal: Algorithm that verifies whether $AB = C$; answers either "yes" or "no"

- First Attempt: "Verification by Computation"

- Compute $AB$ and compare the result with $C$.

- Problem: the standard matrix multiplication algorithm requires $\Theta(n^3)$ time. More sophisticated algorithms still take roughly $O(n^{2.37})$ time.

Tool: Sampling Uniformly at Random

- Example - "Sampling a random bit":

- Sample $b$ u.a.r. from $\{0, 1\}$. Variable $b$ contains either $0$ or $1$ with equal probability.

- Sampling a bit takes $1$ unit of time in the RAM model.
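As a minimal sketch of this tool, the snippet below samples bits with Python's standard `random` module. The helper names `sample_bit` and `sample_bits` are illustrative assumptions, and a pseudorandom generator only approximates the idealized u.a.r. bit of the model.

```python
import random

def sample_bit(rng=random):
    """Return 0 or 1, each with probability 1/2 (one time step in the RAM model)."""
    return rng.getrandbits(1)

def sample_bits(n, rng=random):
    """Return a list of n independently sampled bits."""
    return [sample_bit(rng) for _ in range(n)]
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible, which is convenient when debugging randomized code.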

Algorithm for Verifying Matrix Multiplication

- Steps:

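- Sample $n$ bits u.a.r. and store them in vector $r = (r_1, \dots, r_n)^T \in \{0, 1\}^n$.

- Compute $y = Br$.

- Compute $z = Ay$, so $z = ABr$.

- Compute $w = Cr$.

- if $z = w$ then output yes, else output no.

- Time: sampling $r$ takes $O(n)$; each of the three matrix-vector products takes $O(n^2)$.

Total running time: $O(n^2)$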
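The steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not a reference implementation: matrices are lists of rows, the function names `mat_vec` and `verify_product` are hypothetical, and entries should be `int` or `fractions.Fraction` so that the comparison is exact.

```python
import random

def mat_vec(M, v):
    """Multiply an n x n matrix (list of rows) by a vector in O(n^2) time."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def verify_product(A, B, C, rng=random):
    """One run of the randomized check: True means "yes", False means "no"."""
    n = len(C)
    r = [rng.getrandbits(1) for _ in range(n)]  # n bits sampled u.a.r.
    y = mat_vec(B, r)                           # y = B r
    z = mat_vec(A, y)                           # z = A (B r)
    w = mat_vec(C, r)                           # w = C r
    return z == w
```

Note that the product $AB$ is never formed; only three matrix-vector products are computed.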

Conditional Probability

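Definition: for events $E$ and $F$ with $\Pr[F] > 0$, the probability of $E$ conditioned on $F$ is $\Pr[E \mid F] = \frac{\Pr[E \cap F]}{\Pr[F]}$.

Useful consequence: $\Pr[E \cap F] = \Pr[E \mid F] \cdot \Pr[F]$.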

To simplify notation: for events $E$ and $F$, we write $\Pr[E, F]$ for $\Pr[E \cap F]$.

Independence of Events

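- We can simplify probability terms when conditioning on independent events: if $E$ and $F$ are independent, i.e., $\Pr[E \cap F] = \Pr[E] \cdot \Pr[F]$, and $\Pr[F] > 0$, then $\Pr[E \mid F] = \Pr[E]$.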

Do we require ?

Yes: is clearly dependent on .

Tool: Law of Total Probability

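Let $E_1, \dots, E_n$ be mutually disjoint events with $\bigcup_{i=1}^{n} E_i = \Omega$. Then, for any event $B$, $\Pr[B] = \sum_{i=1}^{n} \Pr[B \cap E_i] = \sum_{i=1}^{n} \Pr[B \mid E_i] \cdot \Pr[E_i]$, where the last sum ranges over the $E_i$ with $\Pr[E_i] > 0$.

Proof: The events $B \cap E_1, \dots, B \cap E_n$ are mutually disjoint and their union is $B$, so the first equality follows from additivity. The second equality follows from the definition of conditional probability.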
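A small numeric check can make the law of total probability concrete. The sketch below uses a hypothetical example that is not from the slides: a fair six-sided die, the event "roll is at least 5", and the partition into even and odd outcomes. All names are illustrative.

```python
from fractions import Fraction

omega = range(1, 7)                        # sample space of a fair die
pr = {w: Fraction(1, 6) for w in omega}    # uniform probability function

def prob(event):
    """Pr[event], where event is a predicate on outcomes."""
    return sum(pr[w] for w in omega if event(w))

def cond(b, e):
    """Pr[b | e] = Pr[b and e] / Pr[e], assuming Pr[e] > 0."""
    return prob(lambda w: b(w) and e(w)) / prob(e)

def at_least_five(w):
    return w >= 5

parts = [lambda w: w % 2 == 0, lambda w: w % 2 == 1]   # even / odd partition
total = sum(cond(at_least_five, e) * prob(e) for e in parts)
```

Here `total` is computed from the conditional probabilities only, and it agrees with `prob(at_least_five)` computed directly.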

Correctness of Algorithm

- Deterministic algorithms either work or they don't.

- Not necessarily true for randomized algorithms!

Two things could go wrong:

- Algorithm outputs no but correct answer is yes:

- Happens if $AB = C$ and $ABr \neq Cr$.

- But, if $AB = C$, then also $ABr = Cr$, for any $r$. So, this can't happen here.

- Algorithm outputs yes but correct answer is no:

- Happens if $AB \neq C$ and $ABr = Cr$.

- This case is a bit trickier...

Example

- Let's look at an instance where $AB \neq C$.

- Suppose we sample a vector $r$ for which $ABr \neq Cr$.

- Then the algorithm will output "no". Correct!

- Now suppose we sample a vector $r$ for which $ABr = Cr$.

- Then the algorithm will output "yes". Error!

- Algorithm detects $AB \neq C$ only for "good" choices of $r$. Can we quantify the probability of sampling a good $r$?
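To see good and bad choices of $r$ concretely, the sketch below enumerates all vectors in $\{0,1\}^n$ for a small hypothetical instance. The matrices are chosen for illustration and are not the instance from the slides, and `good_vectors` is an assumed helper name.

```python
from itertools import product

def mat_vec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def good_vectors(A, B, C):
    """All r in {0,1}^n with ABr != Cr, i.e. the choices that make the check say "no"."""
    n = len(C)
    return [r for r in product((0, 1), repeat=n)
            if mat_vec(A, mat_vec(B, r)) != mat_vec(C, r)]

# Hypothetical instance: AB = [[2, 1], [4, 3]] differs from C in one entry.
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 1], [4, 4]]
good = good_vectors(A, B, C)
print(good)  # [(0, 1), (1, 1)]
```

In this instance exactly half of the four vectors are good: the difference $AB - C$ is nonzero only in its second column, so the mismatch is detected precisely when $r_2 = 1$.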

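Claim: If $AB \neq C$ and $r$ is sampled u.a.r. from $\{0, 1\}^n$, then $\Pr[ABr = Cr] \le 1/2$.

Proof - High-level:

- Define $D = AB - C$. Then $D \neq 0$.

- Let $r = (r_1, \dots, r_n)^T$. Algorithm errs if $Dr = 0$.

- Since $D \neq 0$, it has a nonzero entry; assume w.l.o.g. that this entry is $d_{11}$. For $Dr = 0$, it must be that $\sum_{j=1}^{n} d_{1j} r_j = 0$, i.e.,

$$r_1 = -\frac{\sum_{j=2}^{n} d_{1j} r_j}{d_{11}}. \qquad (1)$$

- We just focus on the 1st row of $D$ multiplied by $r$. The same is true for all other rows of $D$ too, but it's not important for our purpose.

- Let's assume we sample bits in order $r_n, r_{n-1}, \dots, r_1$.

- After sampling $r_n, \dots, r_2$: right-hand side of (1) is already fixed!

- Since $r_1$ is sampled u.a.r. from $\{0, 1\}$, the probability that (1) holds is $\le 1/2$.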

Formal details of the Proof...
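One way to make the argument precise is sketched below, using the law of total probability. It assumes the notation $D = AB - C$ with entries $d_{ij}$, w.l.o.g. $d_{11} \neq 0$, and sums over all $x = (x_2, \dots, x_n) \in \{0, 1\}^{n-1}$; this follows the standard textbook derivation and may differ in detail from the original slide.

$$
\begin{aligned}
\Pr[ABr = Cr]
&= \sum_{x} \Pr\big[(ABr = Cr) \cap ((r_2, \dots, r_n) = x)\big] \\
&\le \sum_{x} \Pr\Big[\Big(r_1 = -\tfrac{\sum_{j=2}^{n} d_{1j} x_j}{d_{11}}\Big) \cap ((r_2, \dots, r_n) = x)\Big] \\
&= \sum_{x} \Pr\Big[r_1 = -\tfrac{\sum_{j=2}^{n} d_{1j} x_j}{d_{11}}\Big] \cdot \Pr\big[(r_2, \dots, r_n) = x\big] \\
&\le \sum_{x} \tfrac{1}{2} \cdot \Pr\big[(r_2, \dots, r_n) = x\big] = \tfrac{1}{2}.
\end{aligned}
$$

The first step is the law of total probability, the second uses that $ABr = Cr$ forces the first row of $Dr$ to be zero, the third uses the independence of $r_1$ from $r_2, \dots, r_n$, and the last uses that $r_1$ takes any fixed value with probability at most $1/2$.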

- We've seen that:

- (1) if $AB = C$, then algorithm is correct with probability $1$;

- (2) if $AB \neq C$, then algorithm is correct with probability $\ge 1/2$.

- This result doesn't seem very useful!

- Let's look at how to improve this.

Boosting the Probability of Success

- Repeat the following $k$ times:

- Run randomized verification algorithm

- If result is "no" break loop.

- Output last result of randomized verification algorithm

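- If $AB \neq C$, all $k$ independent runs answer "yes" with probability at most $(1/2)^k$, so $\Pr[\text{error}] \le 2^{-k}$.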

- For $k = 10$, the error probability is at most $2^{-10} < 0.001$.

- For $k = c \log_2 n$ with a constant $c \ge 1$, the algorithm succeeds with high probability (w.h.p.), i.e., $\Pr[\text{success}] \ge 1 - n^{-c}$.

- Works because error is one-sided: always correct if $AB = C$.
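The boosting loop can be sketched as follows. The function names `verify_once` and `verify_boosted` are illustrative assumptions, matrices are lists of rows with exact (`int` or `Fraction`) entries, and $k \ge 1$ is assumed.

```python
import random

def mat_vec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def verify_once(A, B, C, rng):
    """Single randomized check: True means "yes", False means "no"."""
    r = [rng.getrandbits(1) for _ in range(len(C))]
    return mat_vec(A, mat_vec(B, r)) == mat_vec(C, r)

def verify_boosted(A, B, C, k, rng=random):
    """Repeat the one-sided check up to k times and stop at the first "no"."""
    result = True
    for _ in range(k):
        result = verify_once(A, B, C, rng)
        if not result:   # a "no" answer is always correct, so stop early
            break
    return result        # last result of the randomized verification
```

Stopping early is safe only because the error is one-sided; with a two-sided error one would typically take a majority vote over the runs instead.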

Verifying Matrix Multiplication - Wrapping Up

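- With $k = \Theta(\log n)$ repetitions, verifying $AB = C$ takes $O(n^2 \log n)$ time and the answer is correct w.h.p.

- Similar fingerprinting techniques have many other applications (e.g., string equality verification).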

Application: Finding the Minimum Cut in a Graph

The Min-Cut Problem

- Consider a connected undirected multigraph $G$ with $n$ vertices and $m$ edges.

- A cut of $G$ is a set of edges that disconnects $G$ if we remove them.

- Goal: output a min-cut, i.e., a cut of minimum size.

- Real-world applications: reliability of supply or computer networks

Simple Randomized Min-Cut Algorithm

Min-Cut Algorithm

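Repeat until only $2$ vertices are left:

- Sample edge $e = \{u, v\}$ u.a.r. from available edges

- Contract $e$: merge $u$ and $v$ into a single vertex

- Remove self-loops but keep other multi-edges

Output the edges between the $2$ remaining vertices.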
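A minimal, unoptimized sketch of one run of the contraction algorithm is given below. It assumes vertices are numbered $0, \dots, n-1$ and the multigraph is given as a list of vertex pairs; the name `contract_min_cut` and the relabeling approach are illustrative choices, and each iteration here costs time linear in the number of vertices plus edges rather than the tighter bound a tuned implementation would achieve.

```python
import random

def contract_min_cut(n, edges, rng=random):
    """One run of the contraction algorithm on a connected multigraph.

    edges is a list of (u, v) pairs; parallel edges may appear repeatedly.
    Returns the original edges that cross between the final two super-vertices.
    """
    label = list(range(n))                  # label[v] = super-vertex containing v
    remaining = n
    live = [(u, v) for u, v in edges if u != v]
    while remaining > 2:
        u, v = rng.choice(live)             # sample an available edge u.a.r.
        lu, lv = label[u], label[v]
        label = [lu if x == lv else x for x in label]   # contract: merge lv into lu
        remaining -= 1
        # remove self-loops but keep all other (multi-)edges
        live = [(a, b) for a, b in live if label[a] != label[b]]
    return live
```

The returned edge set is always a cut of the input graph, but it is a minimum cut only if no min-cut edge was contracted along the way.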

Example

- Min-cut .

Tool: Chain Rule of Conditional Probability

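- $\Pr[E_1 \cap E_2 \cap \dots \cap E_k] = \Pr[E_1] \cdot \Pr[E_2 \mid E_1] \cdot \Pr[E_3 \mid E_1 \cap E_2] \cdots \Pr[E_k \mid E_1 \cap \dots \cap E_{k-1}]$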

Proof: Inductively resolve conjunction of conditioned events

Tool: Union Bound

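For any events $E_1, \dots, E_n$: $\Pr\left[\bigcup_{i=1}^{n} E_i\right] \le \sum_{i=1}^{n} \Pr[E_i]$.

Proof:

- Follows by induction.

- To see intuition, just consider case $n = 2$: $\Pr[E_1 \cup E_2] = \Pr[E_1] + \Pr[E_2] - \Pr[E_1 \cap E_2] \le \Pr[E_1] + \Pr[E_2]$.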

Analysis of Min-Cut Algorithm

- Algorithm works as long as no min-cut edge is contracted

- Since the min-cut is small by definition, likely to succeed!

- What's the probability of sampling a min-cut edge?

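Lemma: Let $E_i$ be the event that no edge of a fixed min-cut is contracted in iteration $i$. Then $\Pr[E_i \mid E_1 \cap \dots \cap E_{i-1}] \ge 1 - \frac{2}{n-i+1}$.

Proof:

- Conditioned on $E_1 \cap \dots \cap E_{i-1}$, there are $n-i+1$ vertices at the start of iteration $i$.

- Suppose min-cut has size $k$. Then every (super-)vertex has degree $\ge k$, since otherwise its incident edges would form a smaller cut. So there are still $\ge \frac{k(n-i+1)}{2}$ edges left.

- $\Pr[\overline{E_i} \mid E_1 \cap \dots \cap E_{i-1}] \le \frac{k}{k(n-i+1)/2} = \frac{2}{n-i+1}$.

- $\Pr[E_i \mid E_1 \cap \dots \cap E_{i-1}] \ge 1 - \frac{2}{n-i+1}$.

- Success probability is determined by $\Pr[E_1 \cap \dots \cap E_{n-2}]$.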
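Multiplying the per-iteration bounds $1 - \frac{2}{n-i+1}$ over the $n-2$ iterations (via the chain rule) gives a telescoping product equal to $\frac{2}{n(n-1)}$. The sketch below checks this identity with exact arithmetic; `success_lower_bound` is a hypothetical helper name.

```python
from fractions import Fraction

def success_lower_bound(n):
    """Exact value of the product of (1 - 2/(n-i+1)) for i = 1, ..., n-2."""
    p = Fraction(1)
    for i in range(1, n - 1):
        p *= 1 - Fraction(2, n - i + 1)
    return p

print(success_lower_bound(4))   # 1/6, which equals 2/(4*3)
```

The bound shrinks quadratically in $n$, which is why a single run is not enough and the algorithm is repeated.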

Min-Cut: Wrapping Up

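Theorem: Repeating the contraction algorithm $n(n-1)\ln n$ times and keeping the smallest cut found outputs a min-cut with probability at least $1 - \frac{1}{n^2}$, in $O(n^4 \log n)$ total time.

Proof:

- 1. Probability of Success:

- Let $E_i$ be the event that no min-cut edge is contracted in iteration $i$, so that $\Pr[E_i \mid E_1 \cap \dots \cap E_{i-1}] \ge 1 - \frac{2}{n-i+1}$. By the chain rule, a single run succeeds with probability

$$\Pr[E_1 \cap \dots \cap E_{n-2}] \ge \prod_{i=1}^{n-2} \left(1 - \frac{2}{n-i+1}\right) = \prod_{i=1}^{n-2} \frac{n-i-1}{n-i+1} = \frac{2}{n(n-1)}.$$

- Output is an edge set that is always some cut, i.e., one-sided error.

- Repeat algorithm $n(n-1)\ln n$ times and output smallest set found.

- All runs fail with probability at most $\left(1 - \frac{2}{n(n-1)}\right)^{n(n-1)\ln n} \le e^{-2\ln n} = \frac{1}{n^2}$. We used inequality $1 - x \le e^{-x}$.

- 2. Time Complexity

- $n-2$ iterations of sampling & contraction in base algorithm

- Sampling and contracting random edge takes $O(n)$

- We repeat base algorithm $n(n-1)\ln n$ times

- In total: $O(n^4 \log n)$ steps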
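The effect of repetition can be checked numerically. The sketch below assumes a per-run success probability of at least $\frac{2}{n(n-1)}$ and $n(n-1)\ln n$ independent runs (rounded up); the function names are illustrative, and the repetition count is the standard textbook choice rather than a value confirmed by the slides.

```python
import math

def repetitions(n):
    """Number of independent runs: n(n-1) ln n, rounded up."""
    return math.ceil(n * (n - 1) * math.log(n))

def failure_bound(n):
    """Upper bound on the probability that no run finds a min-cut."""
    p = 2 / (n * (n - 1))            # lower bound on single-run success
    return (1 - p) ** repetitions(n)

for n in (5, 10, 50):
    print(n, repetitions(n), failure_bound(n) <= 1 / n**2)
```

Each line should report `True`, matching the bound obtained from $1 - x \le e^{-x}$.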