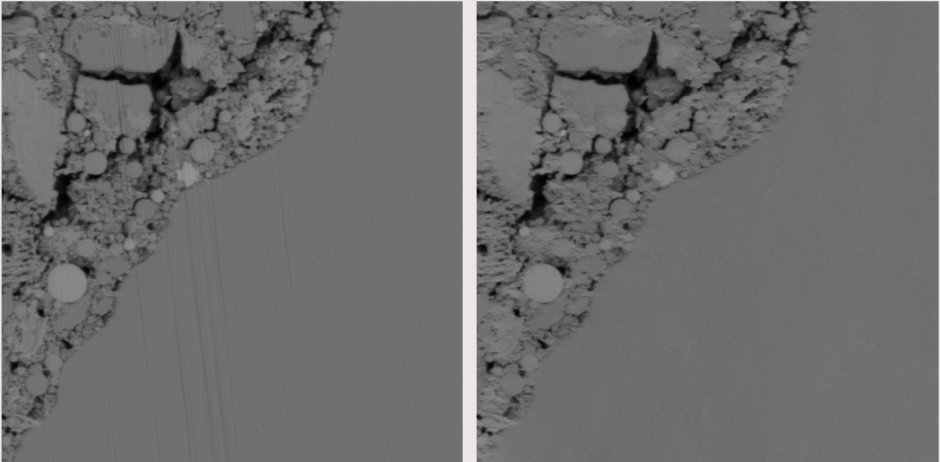

Correction of Multi-Angle Plasma FIB SEM Image Curtaining Artifacts by Fourier-based Linear Optimization Model

By Christopher Schankula, supervised by Dr. Christopher Anand† & Dr. Nabil Bassim

17 August 2017

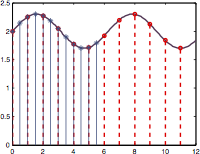

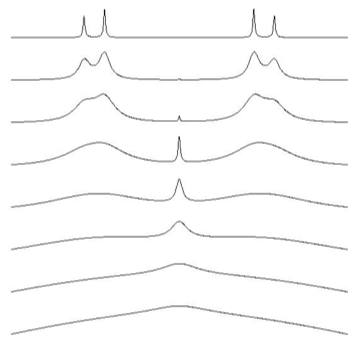

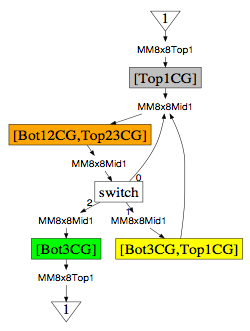

Focused Ion Beam Scanning Electron Microscopy (FIB SEM) is a block face serial section tomography technique capable of providing sophisticated 3D analysis of higher order topology such as tortuosity, connectivity, constrictivity, and bottleneck dimensions. A rocking mill technique is used to mitigate “curtaining” artifacts caused by differences in phase density and milling rates, creating the straight-line artifact at two discrete angles.

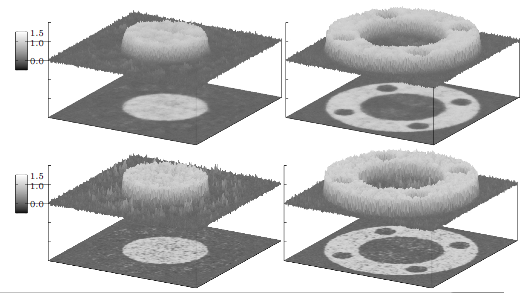

Our method effectively corrects multi-angle curtaining, without greatly modifying the image histogram or reducing the contrast of voids. Compared to other methods, our method does not introduce new, incorrect structure into the image. Ongoing work aims to improve the computational efficiency of the algorithm, taking advantage of its “embarrassingly parallel” nature. Future work includes exploring the benefit of a comprehensive model of curtaining which leverages rich knowledge about their physical properties. Simultaneous secondary electron images can provide better contrast for the curtains, which may prove useful in improving their detection and correction.

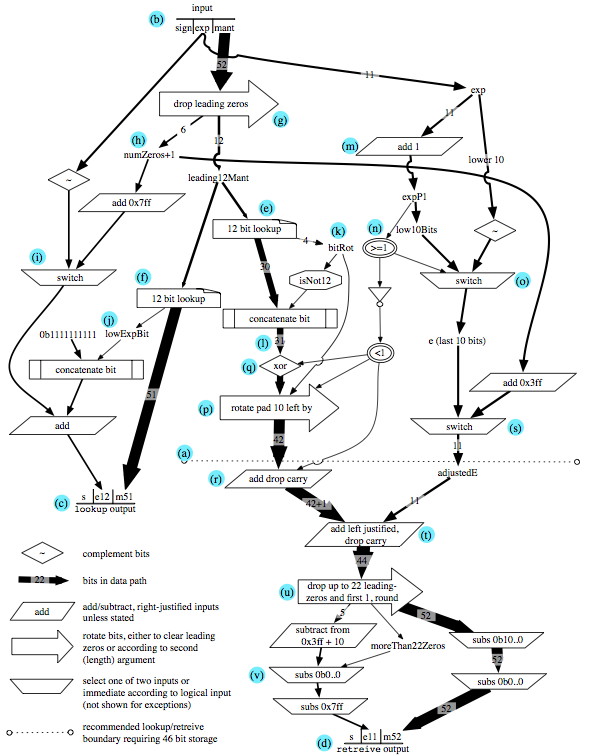

Read the Poster.